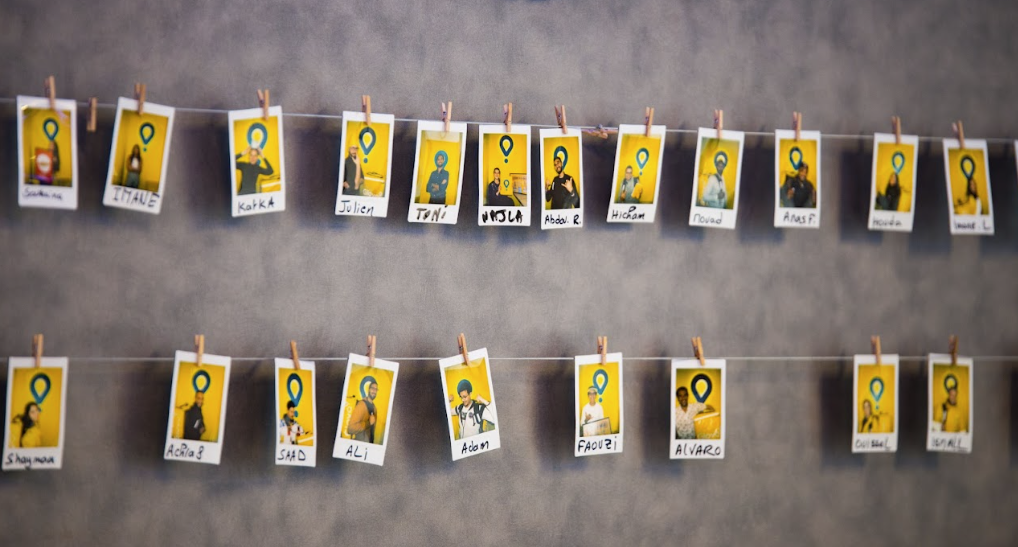

Meet the crew

It doesn’t matter where you come from or what team you join. We will walk you through your entire experience of working at Glovo and support you in doing your best work.

Our latest stories

Your ride of discovery starts here

October holds a special place in our hearts for various reasons. It's a time when we come together to celebrate diversity in all its forms – cultural, linguistic, religious, ethnic, and racial. It's like a whirlwind of cultural festivities that unite us.

Meet the team! #Glovo Engineering is all about the people and opportunities 🚀

From boosting your mental health to creating inclusive workplaces, coming out has the power to transform lives. Get ready to wave your rainbow flags high as we dive into the journey of coming out and share tips for fostering an environment of belonging.

Sophia, a Glovo employee who was forced from her home in Kyiv, arrived in Barcelona in April 2022. Far from home and in an unthinkable situation, she shares with us her story and her reflections on her journey so far.

Our Employee Resource Groups (ERGs) are a powerful tool for promoting inclusion and diversity within Glovo. The ERG “Abilities @ Glovo” provides a space for employees with disabilities (and their allies) to connect, advocate for change, and raise awareness, and it serves as a valuable partner for feedback on how to better support and accommodate the community. Learn how they are helping to create a more inclusive and equitable workplace.

Ask Glovo

Our vision is to give everyone easy access to anything in their city. We also want to answer all your questions about Glovo.

You will receive all the information and credentials the Friday before you start.

We call them technical interviews because they are conducted by the hiring team and focus on more concrete, in-depth aspects of the role. You don’t need to prepare anything in advance! Just expect some situational questions related to your experience and the role.

We love jet-setters! Depending on the role and country, we may sponsor visas; check with your recruiter for more information.

Depending on the role, we may offer relocation assistance; check with your recruiter for more information.

We don’t offer fully remote roles. However, our employees can work from home up to 2 days per week, and we balance periods of high workload with flexible time off and the possibility to work from abroad for 3 weeks a year.

Absolutely! You’re more than welcome to apply for another position if you feel like you’ve gained the right skills and experience.

At Glovo, we aim to give applicants feedback as soon as possible, but due to the high volume of applications we receive, it may take some time.

Business cases are the final step of our recruitment process. They are a practical way for us to assess how creative and qualified you are! We don’t use templates; our hiring managers whip them up for each position.

The business case stage is challenging, but it serves two purposes: it lets candidates see the practical side of the job and decide whether it is what they are currently looking for, and it shows us how they approach the kinds of challenging tasks that will be part of their role.

Don’t worry! We post new positions each week, on a rolling basis. Keep checking back for more openings and subscribe to our emails.

You can apply to as many positions as you like! Just make sure you have the right skills and experience, and you’re good to go.